Data Quality: Only Use Fresh Ingredients

This is the second of five ingredients.

Next, let’s talk about data quality—which is just like using fresh ingredients in cooking.

Imagine trying to cook a steak with expired meat—not only will it taste terrible, but it might also make you sick. The same goes for data. Poor-quality data leads to disastrous results.

Good data should be:

o Accurate—Data must be correct and free from errors.

o Complete—There should be no missing information.

o Up-to-date—Old data should be removed or updated.

If a customer’s address is incorrect, their package will be delivered to the wrong place. If their phone number is outdated, you won’t be able to contact them. Low-quality data creates headaches for businesses, and once you see the impact, you’ll want to fix it immediately!

1. Why Data Quality Matters

A friend of mine works at an e-commerce company. One day, their data got so messy that the order system descended into chaos. Several customer records shared the same name, and the warehouse staff had no idea which “John Smith” had ordered what. It took them hours to sort it out. Only after implementing strict quality checks did they get things under control.
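To make that concrete, here is a minimal sketch of one check that could flag this kind of problem early. It is not what my friend's company actually built; the `customer_id` and `name` fields and the `find_ambiguous_names` helper are hypothetical. It normalizes names and reports any name that belongs to more than one customer ID.

```python
from collections import defaultdict


def find_ambiguous_names(customers):
    """Return normalized names that map to more than one customer ID.

    `customers` is a list of dicts with the hypothetical keys
    "customer_id" and "name".
    """
    ids_by_name = defaultdict(set)
    for customer in customers:
        # Collapse whitespace and lowercase so "john  smith" matches "John Smith".
        key = " ".join(customer["name"].split()).lower()
        ids_by_name[key].add(customer["customer_id"])
    return {name: sorted(ids) for name, ids in ids_by_name.items() if len(ids) > 1}


customers = [
    {"customer_id": 101, "name": "John Smith"},
    {"customer_id": 102, "name": "john  smith"},
    {"customer_id": 103, "name": "Ana Lopez"},
]
ambiguous = find_ambiguous_names(customers)
print(ambiguous)  # {'john smith': [101, 102]}
```

A shared name is not an error by itself. The point is to warn staff that they should match orders on the customer ID instead of the name.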

Another example: A café owner I know recorded customer preferences but didn’t check for data quality. One day, a customer who loved coffee was mistakenly listed as a tea lover, and they were served the wrong drink. The customer’s reaction? “What the heck? I hate tea!” That small mistake cost them a regular customer.

2. The Three Golden Rules of Data Quality

To maintain high-quality data, you need to focus on three key aspects:

o Accuracy – The data should be correct.

- Example: If an address is wrong, the package won’t reach the customer.

o Completeness – No missing data.

- Example: A customer record with just a name but no contact details is useless.

o Timeliness – Data must be up-to-date.

- Example: If the only phone number you have for a friend is five years old, you may not be able to reach them.

- If a customer has moved, but their old address is still in the system, the delivery will fail.
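The three rules above can be sketched as simple checks on a customer record. This is an illustration under assumptions, not a standard: the field names, the required-field list, the one-year freshness threshold, and the loose phone pattern are all made up for this example.

```python
import re
from datetime import date, timedelta

REQUIRED_FIELDS = ("name", "address", "phone")  # assumed required fields
MAX_AGE = timedelta(days=365)                   # assumed freshness threshold
PHONE_PATTERN = re.compile(r"^\+?[0-9][0-9\- ]{6,14}$")  # assumed loose format


def check_record(record, today):
    """Return a list of quality problems found in one customer record."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing {field}")
    # Accuracy: a format check only; it cannot prove the number is really theirs.
    phone = record.get("phone")
    if phone and not PHONE_PATTERN.match(phone):
        problems.append("invalid phone format")
    # Timeliness: the record must have been verified recently enough.
    last_verified = record.get("last_verified")
    if last_verified is None or today - last_verified > MAX_AGE:
        problems.append("stale record")
    return problems


today = date(2024, 1, 1)
bad = {"name": "John Smith", "address": "", "phone": "12ab",
       "last_verified": date(2019, 1, 1)}
good = {"name": "Ana Lopez", "address": "1 Main St", "phone": "+1 555-0100",
        "last_verified": date(2023, 12, 1)}

print(check_record(bad, today))   # ['missing address', 'invalid phone format', 'stale record']
print(check_record(good, today))  # []
```

Note that the accuracy check is the weakest of the three. Code can confirm that a value looks right, but confirming that it is right usually means verifying it against the real world, for example by asking the customer.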

The Cost of Bad Data

Bad data leads to real-world problems:

– Incorrect shipments

– Lost customers

– Wasted time and money

Data quality isn’t just a technical issue—it’s the lifeblood of a business. Even the best marketing, analytics, and AI systems are useless if they rely on bad data.

Bottom line: Good data leads to better decisions, satisfied customers, and a more efficient business.

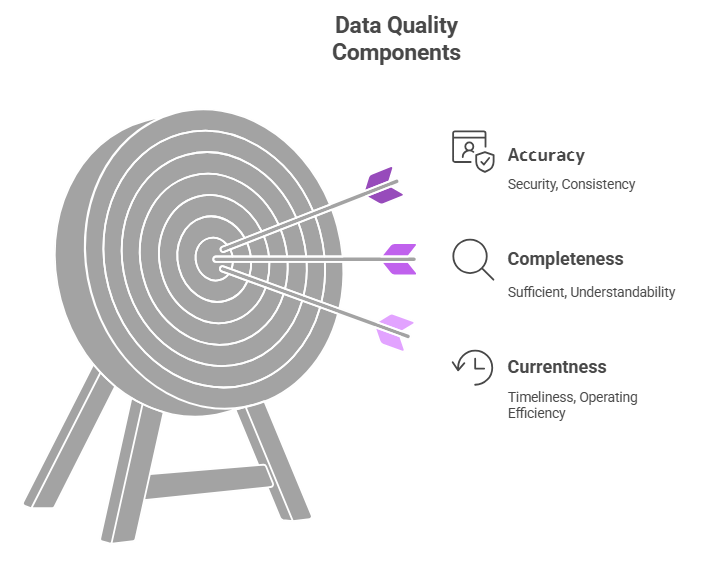

Detailed Elements of Data Quality Assessment

Data quality isn’t just a simple “Is this data good or bad?” question. Many organizations break it down into specific categories to ensure data is reliable and valuable. Let’s take a look at how one company approaches data quality management.

Hello! I’m a data steward at T Company, and today, I’d like to introduce you to the key principles of data quality assessment. Good data leads to better decisions, so it’s essential to evaluate data properly. Let’s go over the six key dimensions used to assess data quality in a structured and practical way.

1. Usability – “Is the data sufficient and useful?”

✔ Sample Data Availability – Users should be able to preview and analyze data before using it.

✔ Data Coverage Rate – The data must be extensive enough and well-integrated across multiple systems.

✔ Storage Location Accuracy – The actual storage location should match the displayed data location.

✔ Data Request & Usage Frequency – Frequently used data should be prioritized for management.

✔ Dashboard Utilization Rate – Check how effectively data visualization tools and reports are used.

2. Security – “Is the data properly protected?”

✔ PII (Personally Identifiable Information) Tagging Rate – Any data containing personal information must be clearly labeled and managed accordingly.

✔ Data Encryption Rate – Sensitive data (e.g., ID numbers, phone numbers) must always be encrypted.

✔ Confidential Data Tagging Rate – High-risk or sensitive information must be marked and managed with extra security controls.

3. Accessibility – “Is the data easy to find and understand?”

✔ Table Description & Logical Naming Rate – Each dataset should include clear descriptions and logical names for easy understanding.

✔ Attribute Description Rate – Individual data attributes should have explanatory labels to help users interpret them correctly.

✔ Tagging Rate – Relevant tags should be added to make data easily searchable.

✔ Data Catalog Registration Rate – All key datasets should be well-documented in a catalog for easy discovery and usage.

4. Timeliness – “Is the data up to date?”

✔ Data Retention Period Registration Rate – Clearly define how long data should be stored before deletion or archiving.

✔ Retention Compliance Rate – Ensure that data is stored and deleted according to the retention policy.

✔ Catalog Update Compliance Rate – Check how frequently the data catalog is updated to reflect the most current datasets.

✔ Sample Data Freshness Rate – Ensure that sample data used for analysis is always up to date.

5. Consistency – “Is the data managed uniformly?”

✔ Standardization Compliance Rate – Ensure that consistent rules, naming conventions, and formats are applied across all datasets.

✔ Operational vs. Analytical Data Consistency – Check whether data from operational systems matches data used for analysis.

6. Operational Efficiency – “Is data managed effectively?”

✔ Data Collection Frequency Compliance – Ensure that data is collected according to the planned schedule.

✔ Data Request Response Time – Measure how quickly data teams respond to user requests.

✔ System Recovery Time – Evaluate how long it takes to restore data and systems after an outage.
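Many of these metrics are "rates", meaning the share of items that meet a condition. As a rough sketch of how two of them (Attribute Description Rate and PII Tagging Rate) might be computed from catalog metadata, consider the example below. The column records, their keys, and the `rate` helper are hypothetical; T Company's actual formulas are not given here.

```python
def rate(items, predicate):
    """Share of items (0.0 to 1.0) that satisfy `predicate`.

    Returns 0.0 for an empty list; that convention is an assumption.
    """
    if not items:
        return 0.0
    return sum(1 for item in items if predicate(item)) / len(items)


# Hypothetical catalog metadata for the columns of one table.
columns = [
    {"name": "customer_id", "description": "Unique customer key",
     "contains_pii": False, "pii_tag": False},
    {"name": "phone", "description": "",
     "contains_pii": True, "pii_tag": True},
    {"name": "email", "description": "Contact email",
     "contains_pii": True, "pii_tag": False},
    {"name": "city", "description": "Shipping city",
     "contains_pii": False, "pii_tag": False},
]

# Attribute Description Rate: columns with a non-empty description.
attribute_description_rate = rate(columns, lambda c: bool(c["description"].strip()))

# PII Tagging Rate: among columns that contain PII, how many carry the tag.
pii_columns = [c for c in columns if c["contains_pii"]]
pii_tagging_rate = rate(pii_columns, lambda c: c["pii_tag"])

print(f"{attribute_description_rate:.0%}")  # 75%
print(f"{pii_tagging_rate:.0%}")            # 50%
```

The denominator matters: the PII rate is measured only against columns that actually hold personal data, so an untagged `email` column pulls the score down while an untagged `city` column does not.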

Mapping Data Quality Categories in This Blog

To simplify data quality assessment, we can map the six dimensions above into three major categories:

o Accuracy – “How reliable and correctly managed is the data?”

– Security (Protection of sensitive and personal data ensures accuracy.)

- Verifying that personal and confidential data is properly protected and managed correctly.

– Consistency (Following defined rules ensures data is always accurate.)

- Ensuring that data is structured, standardized, and follows predefined rules for accuracy.

o Completeness – “Is the data comprehensive and free of missing information?”

– Usability (Sufficiency) (Sufficient and well-connected data is essential for completeness.)

- Ensuring that the data is comprehensive, covers all necessary aspects, and is well-integrated across systems.

– Accessibility (Understandability) (Well-documented metadata makes data easier to find and use.)

- Evaluating whether metadata, descriptions, and tagging make it easy to search, understand, and use data.

o Currentness – “Is the data up to date and actively maintained?”

– Timeliness (Regular updates ensure data stays relevant and fresh.)

- Checking whether data is consistently updated and aligned with real-time needs.

– Operational Efficiency (Efficient collection and management help maintain up-to-date data.)

- Assessing whether data is being collected, maintained, and processed efficiently according to schedule.
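The mapping above can also be written down as a small lookup table. The sketch below rolls six dimension scores up into the three categories. Using a plain average is an assumption made for illustration, and the scores are invented sample values.

```python
# Category -> dimensions, following the mapping in this section.
CATEGORY_MAP = {
    "Accuracy": ["Security", "Consistency"],
    "Completeness": ["Usability", "Accessibility"],
    "Currentness": ["Timeliness", "Operational Efficiency"],
}


def roll_up(dimension_scores):
    """Average the dimension scores (0.0 to 1.0) that belong to each category."""
    summary = {}
    for category, dimensions in CATEGORY_MAP.items():
        scores = [dimension_scores[d] for d in dimensions]
        summary[category] = sum(scores) / len(scores)
    return summary


# Invented sample scores, one per dimension.
dimension_scores = {
    "Security": 1.0,
    "Consistency": 0.5,
    "Usability": 0.75,
    "Accessibility": 0.25,
    "Timeliness": 0.5,
    "Operational Efficiency": 1.0,
}
summary = roll_up(dimension_scores)
print(summary)  # {'Accuracy': 0.75, 'Completeness': 0.5, 'Currentness': 0.75}
```

In this sample, Completeness scores lowest, which points back to Usability and Accessibility as the places to look first.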